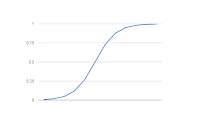

A FORMAL INTRO TO MACHINE LEARNING USING LOGISTIC REGRESSION FROM SCRATCH

How does it do that? Well, that's math for you! Yes, it literally figures it out using mathematics. What kind of math? For starters, if you've ever had a math class at school or university, that'll do! That being said, we're going to learn a little bit of ML (machine learning), especially the kind called supervised learning.

Supervised Learning: a method where we tell the computer what the right answer is rather than how to find it. We give it examples together with their correct outputs, which in technical terms are called "LABELS", and it learns to predict the label for new examples.

Say we have data on phones: camera, storage, RAM, 5G or 4G, and brand (Apple, Samsung, etc.), along with their prices. We need to predict the price of a new phone from its specs, based on our previous data on specs and brands. Here camera, storage, RAM, 5G or 4G, and brand are the features, and the price is the target (the label).
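To make features and target concrete, here is a minimal sketch on a handful of hypothetical phones (the specs and prices are made up for illustration). Each row of `X` holds one phone's features and `y` holds the matching prices, the labels. Brand is left out because it is text and would first need converting to numbers, for example with one-hot encoding. A plain least-squares linear fit, used here only as the simplest regression model and not the method of this post, learns one weight per feature plus a bias and then predicts the price of a new phone.

```python
import numpy as np

# Hypothetical toy data, one row per phone:
# columns = camera (MP), storage (GB), RAM (GB), is_5G (1 = 5G, 0 = 4G)
X = np.array([
    [12,  64,  4, 0],
    [48, 128,  6, 1],
    [64, 256,  8, 1],
    [12, 128,  4, 0],
    [50, 256, 12, 1],
    [ 8,  32,  3, 0],
], dtype=float)
# Hypothetical prices (the target / labels), one per phone
y = np.array([200, 450, 700, 260, 820, 120], dtype=float)

# Append a column of ones so the model can also learn a bias (intercept)
X_b = np.hstack([X, np.ones((X.shape[0], 1))])

# Least-squares fit: find w that minimizes ||X_b @ w - y||^2
w, *_ = np.linalg.lstsq(X_b, y, rcond=None)

# Predict the price of a new (hypothetical) phone: 48 MP, 256 GB, 8 GB RAM, 5G, bias term 1
new_phone = np.array([48, 256, 8, 1, 1], dtype=float)
predicted_price = new_phone @ w
print(round(predicted_price, 2))
```

The same pattern (features in, label out, learn the mapping from examples) is what the MNIST model does, except that its label is a digit class rather than a price.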

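Logistic regression, despite the name, is a classification algorithm: instead of predicting a number such as a price, it predicts which class an input belongs to, and recognizing handwritten digits is exactly that kind of problem. First we need data. I'm using MNIST, a dataset of handwritten digit images from 0 to 9, stored as CSV: each row is one 28×28 image, with the label in the first column followed by the pixel values. More about MNIST: link. So there are 28 × 28 = 784 inputs per image for the neural network used here, which has a hidden layer of 10 ReLU units and a softmax output layer of 10 units (softmax is the multi-class version of logistic regression). We split the data into a training set and a held-out dev (test) set: the code keeps the first 6,000 shuffled rows for testing and trains on the rest, roughly a 90/10 split for the standard 60,000-image MNIST training set.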
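Since logistic regression is the star of the title, here is a minimal from-scratch sketch of plain binary logistic regression, assuming a small synthetic dataset of two point clouds as the two classes (not MNIST). The sigmoid squashes a weighted sum into a probability between 0 and 1, and gradient descent on the mean cross-entropy loss adjusts the weights. The MNIST model below uses the same idea, with softmax in place of the sigmoid to handle 10 classes.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: class 0 centred at (-2, -2), class 1 centred at (2, 2)
X0 = rng.normal(loc=-2.0, scale=1.0, size=(100, 2))
X1 = rng.normal(loc=2.0, scale=1.0, size=(100, 2))
X = np.vstack([X0, X1])                            # (200, 2) features
y = np.concatenate([np.zeros(100), np.ones(100)])  # (200,) labels

def sigmoid(z):
    # Maps any real number to a probability in (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

w = np.zeros(2)   # one weight per feature
b = 0.0           # bias
alpha = 0.1       # learning rate

for _ in range(1000):
    p = sigmoid(X @ w + b)          # predicted P(class 1) for every example
    # Gradients of the mean cross-entropy loss with respect to w and b
    dw = X.T @ (p - y) / y.size
    db = np.mean(p - y)
    w -= alpha * dw
    b -= alpha * db

# Classify as 1 when the predicted probability is at least 0.5
predictions = (sigmoid(X @ w + b) >= 0.5).astype(int)
accuracy = np.mean(predictions == y)
print(f"training accuracy: {accuracy:.3f}")
```

Note that the gradient has the same "prediction minus label" shape (`p - y`) that shows up as `dZ2 = A2 - one_hot_Y` in the MNIST code.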

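```python
import pandas as pd
import matplotlib.pyplot as plt
import numpy as np

# Load MNIST from CSV. pd.read_csv treats the first line as a header row.
# Each row is one image: column 0 is the digit label (0-9),
# the other 784 columns are pixel values (0-255).
data = pd.read_csv('mnist.csv')
data = np.array(data)
m, n = data.shape          # m = number of images, n = 1 label + 784 pixels
np.random.shuffle(data)    # shuffle rows so both splits get a mix of digits

# Hold out the first 6000 shuffled rows as the dev (test) set and transpose,
# so each column is one example and each row is one feature
data_dev = data[0:6000].T
# Row 0 holds the labels
Y_dev = data_dev[0]
# Rows 1..784 hold the pixels, shape (784, 6000)
X_dev = data_dev[1:n]
# Dividing by 255 scales the pixels to values between 0 and 1
X_dev = X_dev / 255.

# Use the remaining rows for training, prepared the same way
data_train = data[6000:m].T
Y_train = data_train[0]
X_train = data_train[1:n]
X_train = X_train / 255.
_, m_train = X_train.shape  # number of training examples
```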
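The split above is easier to picture on something small. Here is a self-contained sketch that applies the same steps (shuffle, hold out the first rows, transpose, scale by 255) to a tiny random array shaped like the MNIST CSV: 10 fake images of 784 pixels each. The random values and the 2-row hold-out are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(42)

# Fake "CSV" with 10 rows: column 0 = label (0-9), columns 1..784 = pixels (0-255)
labels = rng.integers(0, 10, size=(10, 1))
pixels = rng.integers(0, 256, size=(10, 784))
data = np.hstack([labels, pixels])

m, n = data.shape            # m = 10 examples, n = 785 columns
rng.shuffle(data)            # shuffles the rows in place

n_dev = 2                    # hold out 2 of 10 rows here (the post holds out 6000)
data_dev = data[:n_dev].T    # transpose: one column per example
Y_dev, X_dev = data_dev[0], data_dev[1:n] / 255.

data_train = data[n_dev:m].T
Y_train, X_train = data_train[0], data_train[1:n] / 255.

print(X_train.shape, Y_train.shape)        # (784, 8) (8,)
print(X_dev.min() >= 0, X_dev.max() <= 1)  # True True
```

Transposing puts one example per column, which is why the network below multiplies `W1.dot(X)` with `W1` of shape (10, 784).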

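```python
# Randomly initialize the weights and biases with values in [-0.5, 0.5):
# layer 1 maps 784 pixels -> 10 hidden units, layer 2 maps 10 hidden units -> 10 classes
def init_params():
    W1 = np.random.rand(10, 784) - 0.5
    b1 = np.random.rand(10, 1) - 0.5
    W2 = np.random.rand(10, 10) - 0.5
    b2 = np.random.rand(10, 1) - 0.5
    return W1, b1, W2, b2
```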

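```python
# ReLU activation: keeps positive values, replaces negatives with 0
def ReLU(Z):
    return np.maximum(Z, 0)

# Softmax over each column: turns 10 scores into probabilities that sum to 1.
# Subtracting the column max first keeps np.exp from overflowing.
def softmax(Z):
    expZ = np.exp(Z - np.max(Z, axis=0, keepdims=True))
    A = expZ / np.sum(expZ, axis=0, keepdims=True)
    return A
```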
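Softmax does not change when the same constant is subtracted from every score in a column, which gives a standard way to keep `np.exp` from overflowing. A small sketch comparing the naive formula with the shifted one, assuming large raw scores such as 1000:

```python
import numpy as np

def softmax_naive(Z):
    return np.exp(Z) / np.sum(np.exp(Z), axis=0, keepdims=True)

def softmax_stable(Z):
    # Subtract each column's max before exponentiating: mathematically the same
    # result, but the largest exponent is now exp(0) = 1
    expZ = np.exp(Z - np.max(Z, axis=0, keepdims=True))
    return expZ / np.sum(expZ, axis=0, keepdims=True)

small = np.array([[1.0], [2.0], [3.0]])  # one column = one example, 3 classes
large = small + 1000.0                   # the same scores shifted by 1000

with np.errstate(over="ignore", invalid="ignore"):
    naive_large = softmax_naive(large)   # exp(1001) overflows to inf, inf/inf = nan

stable_small = softmax_stable(small)
stable_large = softmax_stable(large)

print(np.isnan(naive_large).any())                 # True
print(np.allclose(stable_small, stable_large))     # True
print(np.allclose(stable_large.sum(axis=0), 1.0))  # True
```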

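```python
# Forward pass: X has shape (784, m), one column per image
def forward_prop(W1, b1, W2, b2, X):
    Z1 = W1.dot(X) + b1   # (10, m) hidden-layer scores
    A1 = ReLU(Z1)         # (10, m) hidden-layer activations
    Z2 = W2.dot(A1) + b2  # (10, m) output scores
    A2 = softmax(Z2)      # (10, m) class probabilities
    return Z1, A1, Z2, A2
```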
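To see how the matrix shapes line up in the forward pass, here is a quick standalone check on random inputs, assuming 5 fake images and random parameters initialized the same way as `init_params`. The bias vectors of shape (10, 1) are broadcast across all 5 columns.

```python
import numpy as np

rng = np.random.default_rng(1)
m = 5                                      # five fake examples
X = rng.random((784, m))                   # one column per image
W1, b1 = rng.random((10, 784)) - 0.5, rng.random((10, 1)) - 0.5
W2, b2 = rng.random((10, 10)) - 0.5, rng.random((10, 1)) - 0.5

Z1 = W1 @ X + b1                           # (10, 784) @ (784, 5) + (10, 1) -> (10, 5)
A1 = np.maximum(Z1, 0)                     # ReLU keeps the shape
Z2 = W2 @ A1 + b2                          # (10, 10) @ (10, 5) + (10, 1) -> (10, 5)
expZ = np.exp(Z2 - Z2.max(axis=0, keepdims=True))
A2 = expZ / expZ.sum(axis=0, keepdims=True)  # softmax per column

print(Z1.shape, A2.shape)                  # (10, 5) (10, 5)
print(np.allclose(A2.sum(axis=0), 1.0))    # True
```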

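```python
# Derivative of ReLU: 1 where Z > 0, otherwise 0 (booleans act as 1/0)
def ReLU_deriv(Z):
    return Z > 0

def one_hot(Y):
    # Matrix of zeros with one row per example and one column per digit: (m, 10)
    one_hot_Y = np.zeros((Y.size, 10))
    # In row i, set the column of that example's label Y[i] to 1
    one_hot_Y[np.arange(Y.size), Y] = 1
    # Transpose to (10, m) so it lines up with A2
    one_hot_Y = one_hot_Y.T
    return one_hot_Y
```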
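To see what one-hot encoding produces, here is a small standalone version run on three hypothetical labels, using 10 classes as in MNIST. Column j of the result is the target vector for example j.

```python
import numpy as np

def one_hot(Y, num_classes=10):
    # (num_examples, num_classes) matrix of zeros
    one_hot_Y = np.zeros((Y.size, num_classes))
    # Row i gets a 1 in column Y[i]
    one_hot_Y[np.arange(Y.size), Y] = 1
    # Transpose so each column is one example, matching the (10, m) network output
    return one_hot_Y.T

Y = np.array([2, 0, 9])
encoded = one_hot(Y)
print(encoded.shape)               # (10, 3)
print(encoded[:, 0])               # [0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]
print(np.argmax(encoded, axis=0))  # [2 0 9]
```

Taking `argmax` down each column recovers the original labels, which is the same trick `get_predictions` uses on `A2`.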

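```python
# Backward pass for softmax + cross-entropy loss, averaged over the examples
def backward_prop(Z1, A1, Z2, A2, W1, W2, X, Y):
    m = Y.size                      # number of examples being trained on
    one_hot_Y = one_hot(Y)
    dZ2 = A2 - one_hot_Y            # gradient of the loss w.r.t. Z2
    dW2 = 1 / m * dZ2.dot(A1.T)
    # Sum over examples only, giving one gradient per output unit: (10, 1)
    db2 = 1 / m * np.sum(dZ2, axis=1, keepdims=True)
    dZ1 = W2.T.dot(dZ2) * ReLU_deriv(Z1)
    dW1 = 1 / m * dZ1.dot(X.T)
    # One gradient per hidden unit: (10, 1)
    db1 = 1 / m * np.sum(dZ1, axis=1, keepdims=True)
    return dW1, db1, dW2, db2
```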
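The line `dZ2 = A2 - one_hot_Y` relies on the fact that, for softmax followed by cross-entropy loss L = -Σ y_k log(a_k), the gradient with respect to the scores is simply a - y. A minimal numerical check of that claim for a single example, using central finite differences (the scores and label below are arbitrary assumptions):

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))
    return e / np.sum(e)

def cross_entropy(z, y):
    # Loss for one example: -sum_k y_k * log(softmax(z)_k)
    return -np.sum(y * np.log(softmax(z)))

z = np.array([0.5, -1.2, 2.0, 0.3])  # arbitrary scores for 4 classes
y = np.array([0.0, 0.0, 1.0, 0.0])   # one-hot label: the true class is 2

analytic = softmax(z) - y            # the formula used for dZ2

# Central finite differences: (L(z + h*e_k) - L(z - h*e_k)) / (2h)
h = 1e-6
numeric = np.zeros_like(z)
for k in range(z.size):
    step = np.zeros_like(z)
    step[k] = h
    numeric[k] = (cross_entropy(z + step, y) - cross_entropy(z - step, y)) / (2 * h)

# The difference should be very small (well below 1e-6)
print(np.max(np.abs(analytic - numeric)))
```

In `backward_prop` the same per-example gradient is computed for every column at once, and the `1 / m` factor averages it over the training set.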

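```python
# One gradient descent step: move each parameter against its gradient,
# scaled by the learning rate alpha
def update_params(W1, b1, W2, b2, dW1, db1, dW2, db2, alpha):
    W1 = W1 - alpha * dW1
    b1 = b1 - alpha * db1
    W2 = W2 - alpha * dW2
    b2 = b2 - alpha * db2
    return W1, b1, W2, b2

# Predicted digit = row index of the highest probability in each column
def get_predictions(A2):
    return np.argmax(A2, 0)

# Fraction of predictions that match the labels
def get_accuracy(predictions, Y):
    print(predictions, Y)
    return np.sum(predictions == Y) / Y.size
```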

```python
def gradient_descent(X, Y, alpha, iterations):
    W1, b1, W2, b2 = init_params()
    for i in range(iterations):
        Z1, A1, Z2, A2 = forward_prop(W1, b1, W2, b2, X)
        dW1, db1, dW2, db2 = backward_prop(Z1, A1, Z2, A2, W1, W2, X, Y)
        W1, b1, W2, b2 = update_params(W1, b1, W2, b2, dW1, db1, dW2, db2, alpha)
        # Report the training accuracy every 10 iterations
        if i % 10 == 0:
            print("Iteration: ", i)
            predictions = get_predictions(A2)
            print(get_accuracy(predictions, Y))
    return W1, b1, W2, b2

# Train for 500 iterations with learning rate alpha = 0.10
W1, b1, W2, b2 = gradient_descent(X_train, Y_train, 0.10, 500)
```
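Gradient descent itself is easiest to see with a single parameter. A minimal sketch, assuming the toy loss f(w) = (w - 3)², whose minimum is at w = 3: each step moves w against the derivative 2(w - 3), scaled by the learning rate alpha (0.10, the value passed to gradient_descent above).

```python
# Toy loss f(w) = (w - 3)^2 with derivative f'(w) = 2 * (w - 3)
def grad(w):
    return 2 * (w - 3)

w = 0.0        # starting guess
alpha = 0.10   # learning rate
for i in range(100):
    w = w - alpha * grad(w)   # same update rule as update_params

print(round(w, 4))  # 3.0
```

Each step here shrinks the distance to the minimum by a factor of 0.8. A larger alpha moves faster but can overshoot, and in this toy example any alpha above 1.0 makes it diverge.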

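```python
# Run the trained network and return the predicted digit for each column of X
def make_predictions(X, W1, b1, W2, b2):
    _, _, _, A2 = forward_prop(W1, b1, W2, b2, X)
    predictions = get_predictions(A2)
    return predictions

# Show one training image with its prediction and true label.
# X_train[:, index, None] keeps the column 2-D, shape (784, 1).
# These are training images; use X_dev / Y_dev to check unseen data.
def test_prediction(index, W1, b1, W2, b2):
    current_image = X_train[:, index, None]
    prediction = make_predictions(current_image, W1, b1, W2, b2)
    label = Y_train[index]
    print("Prediction: ", prediction)
    print("Label: ", label)
    # Undo the /255 scaling and reshape the 784 pixels back into a 28x28 image
    current_image = current_image.reshape((28, 28)) * 255
    plt.gray()
    plt.imshow(current_image, interpolation='nearest')
    plt.show()

test_prediction(0, W1, b1, W2, b2)
test_prediction(1, W1, b1, W2, b2)
test_prediction(2, W1, b1, W2, b2)
test_prediction(3, W1, b1, W2, b2)
```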

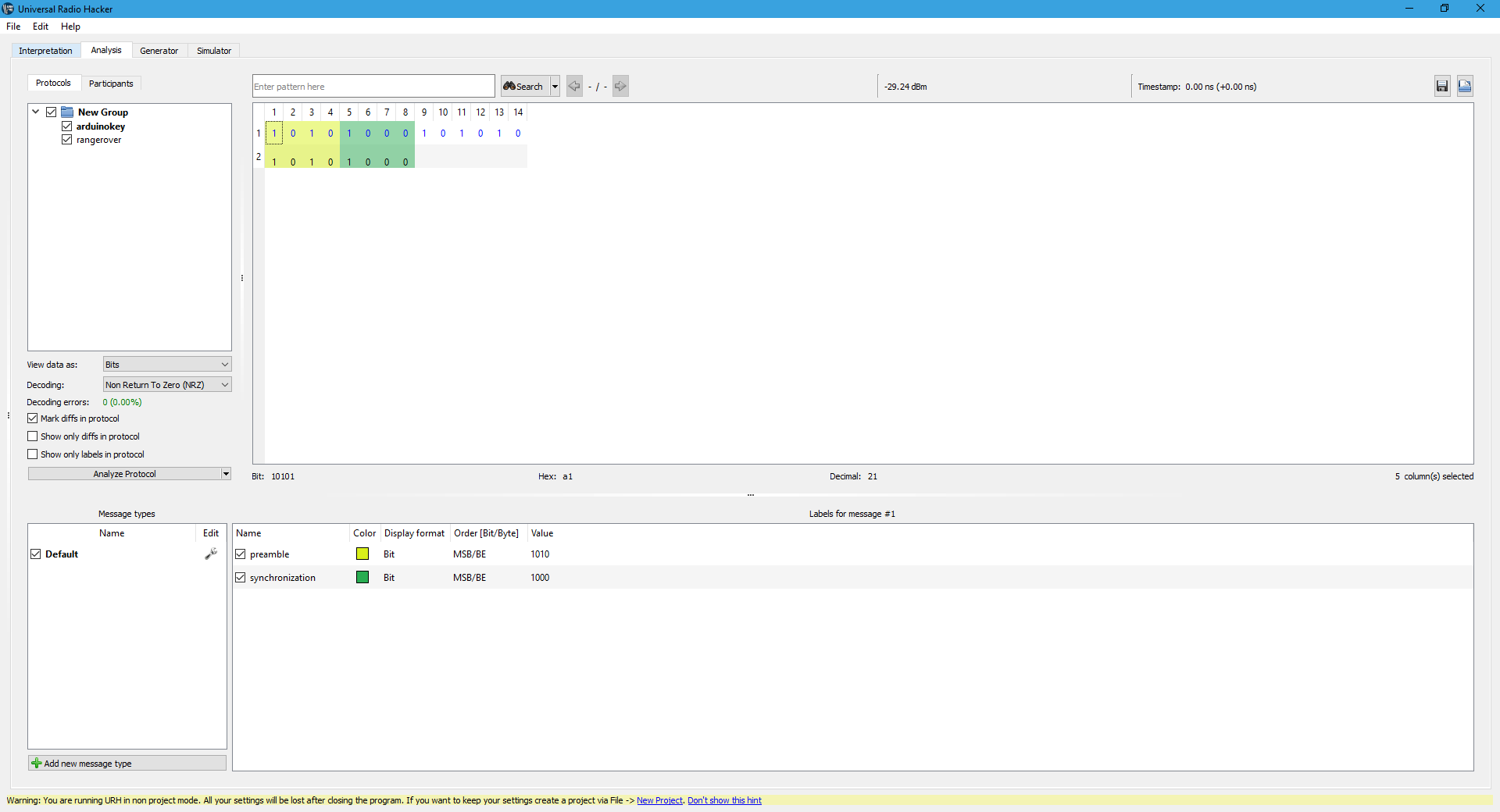

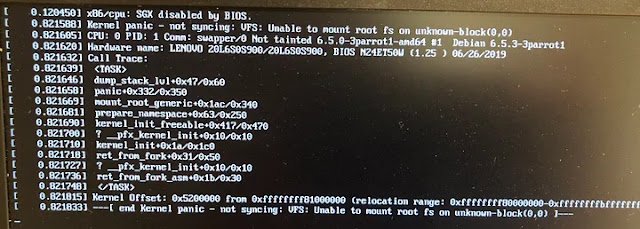

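Training output (every 10 iterations the script prints the iteration number, the predicted digits next to the true labels, and the training accuracy):

Iteration: 0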

[3 1 1 ... 1 1 3] [2 8 8 ... 5 6 0]

0.12961111111111112

Iteration: 10

[7 4 6 ... 2 0 7] [2 8 8 ... 5 6 0]

0.1825

Iteration: 20

[7 4 6 ... 5 0 7] [2 8 8 ... 5 6 0]

0.24507407407407408

Iteration: 30

[7 4 6 ... 8 1 7] [2 8 8 ... 5 6 0]

0.3013888888888889

Iteration: 40

[0 2 3 ... 8 1 0] [2 8 8 ... 5 6 0]

0.3474814814814815

Iteration: 50

[0 2 3 ... 8 1 0] [2 8 8 ... 5 6 0]

0.3875925925925926

Iteration: 60

[0 2 3 ... 8 1 0] [2 8 8 ... 5 6 0]

0.43164814814814817

Iteration: 70

[0 2 3 ... 8 1 0] [2 8 8 ... 5 6 0]

0.4744259259259259

Iteration: 80

[0 2 3 ... 8 1 0] [2 8 8 ... 5 6 0]

0.5147037037037037

Iteration: 90

[0 2 3 ... 5 1 0] [2 8 8 ... 5 6 0]

0.5485

Iteration: 100

[0 2 3 ... 5 1 0] [2 8 8 ... 5 6 0]

0.5779814814814814

Iteration: 110

[0 2 3 ... 5 1 0] [2 8 8 ... 5 6 0]

0.6021851851851852

Iteration: 120

[0 2 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.6239074074074074

Iteration: 130

[0 2 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.6423333333333333

Iteration: 140

[0 2 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.6590185185185186

Iteration: 150

[0 2 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.6735

Iteration: 160

[0 2 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.6866481481481481

Iteration: 170

[0 2 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.6987592592592593

Iteration: 180

[0 4 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7098518518518518

Iteration: 190

[0 4 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7205555555555555

Iteration: 200

[0 4 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.729037037037037

Iteration: 210

[0 4 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7376111111111111

Iteration: 220

[2 4 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7456851851851852

Iteration: 230

[2 4 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.753

Iteration: 240

[2 4 3 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7595555555555555

Iteration: 250

[2 4 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.765962962962963

Iteration: 260

[2 4 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.771537037037037

Iteration: 270

[2 4 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7766851851851851

Iteration: 280

[2 4 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7813333333333333

Iteration: 290

[2 4 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7853518518518519

Iteration: 300

[2 4 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7896851851851852

Iteration: 310

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7942407407407407

Iteration: 320

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.7982407407407407

Iteration: 330

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8014074074074075

Iteration: 340

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8048518518518518

Iteration: 350

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8079259259259259

Iteration: 360

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8107222222222222

Iteration: 370

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8132777777777778

Iteration: 380

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8157037037037037

Iteration: 390

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8181296296296297

Iteration: 400

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8203518518518519

Iteration: 410

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8231666666666667

Iteration: 420

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8256481481481481

Iteration: 430

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.827962962962963

Iteration: 440

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8297777777777777

Iteration: 450

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8318703703703704

Iteration: 460

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.833462962962963

Iteration: 470

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8345925925925926

Iteration: 480

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8363518518518519

Iteration: 490

[2 8 8 ... 5 6 0] [2 8 8 ... 5 6 0]

0.8379444444444445

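Training accuracy climbs from about 13% to about 84% over the 500 iterations. The four test_prediction calls then print (and plot) the first four training images:

Prediction: [2]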

Label: 2

Prediction: [8]

Label: 8

Prediction: [8]

Label: 8

Prediction: [4]

Label: 4

[Finished in 161.4s]
